A Brief Overview

The old adage still holds true today: avoid putting all your eggs in one basket. With cloud computing gaining traction on account of cost efficiencies, and organizations maintaining distinct work silos such as development, testing, production and support, it’s sensible to deploy these on separate cloud infrastructure to avoid service disruption. A multi-cloud strategy lets organizations hedge the risk of service breakdowns. One sample scenario is deploying SaaS and PaaS workloads on Amazon Web Services and Microsoft Azure respectively.

The Market at Large

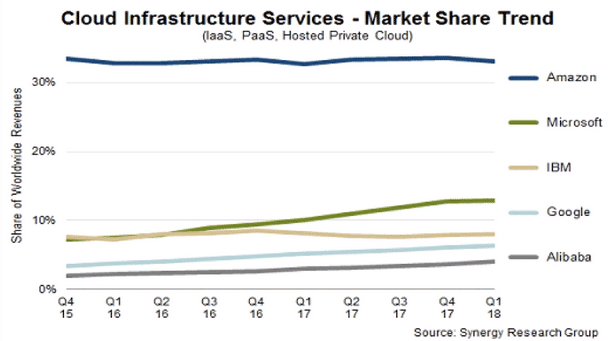

The presence of many players in the cloud infrastructure market is undoubtedly a key requirement for a multi-cloud paradigm, and many players already occupy this space, such as Amazon, Microsoft, IBM, Alibaba, Google and a few more. A look at the market share trends of these players (see the figure above) clearly indicates their respective positions.

Even though Amazon appears to have a clear lead over its competitors, the presence of multiple players is itself a good reason to consider adopting more than one cloud. It has been observed that even Amazon users are either adopting or keen to adopt a +1 model, where the +1 could be another public cloud such as Microsoft Azure or Google, or in some cases an on-premise data centre. This suggests that deployments are a blend of either public+public or public+private clouds.

Needless to say, the presence of multiple players is giving Amazon tough competition in its efforts to retain pole position in the market.

According to the IDC FutureScape: Worldwide Cloud 2017 Predictions report, more than 85% of enterprise IT organizations will have committed themselves to multi-cloud architectures by 2018. Although these statistics are somewhat dated, they remain relevant as a sign of organizations' intention to allocate budgets towards multi-cloud purchases, which keeps the market buoyant and competitive. The recent spate of cloud-native applications being churned out by enterprises is the key driver for evaluating cloud vendors, and for choosing to host those applications on more than one cloud.

Before moving to the next section to understand the reasoning behind and utility of multi-cloud adoption, it is worth recalling that cloud-based applications are characterized by a portable software stack, are DevOps driven, are free from vendor lock-in and, above all, can deliver capabilities available across cloud vendors.

Rationale and Benefits

A quick look at the benefits of multi-cloud architectures clearly justifies their rationale. Let’s begin with a common benefit.

- Reduces the risk of service breakdowns:

Applications have moved from high availability to continuous availability. With the cloud being, among other things, a cost-effective hosting option, application deployments that straddle multiple clouds can enjoy the strengths afforded by redundancy. Cloud architects can now build disaster recovery into their application blueprints by shifting transactions or impacted services to the other cloud. Taking this a little further, some experts even argue that multi-cloud architectures bring down the risk of DDoS attacks, since a mitigation strategy can draw on the same redundancy. Of late, investments seeking redundancy are no longer questioned but approved.

- Reduces the risk of pricing:

A competitive market unquestionably gives customers the power to bargain, and this holds true for cloud purchases as well. Although pricing is determined by various dimensions, for instance the unit of time billed (minute, hour), enterprises can get a clear picture of the total cost across multiple clouds and match it against the benefits each one offers. Competitive pricing makes it easier to balance the cost of running multi-cloud applications or features against their allocated budgets. Since portability is essential to taking advantage of competitive pricing, cloud architects must include it in their application blueprints.

- Empowers architects to choose across the spectrum:

It has been observed that architects usually ask for the freedom to choose every technology component that goes into building an application. In practice, however, the choice of a few of those components is determined by the Enterprise-IT team, e.g. Oracle as the data store and middleware. Since cloud environments are differentiated by their capabilities and pricing, architects prefer to have the freedom to choose rather than be dictated to by their Enterprise-IT team. Moreover, since some clouds are region specific, the architect's freedom to choose is even more justified in order to get the best from them.

The use-case library

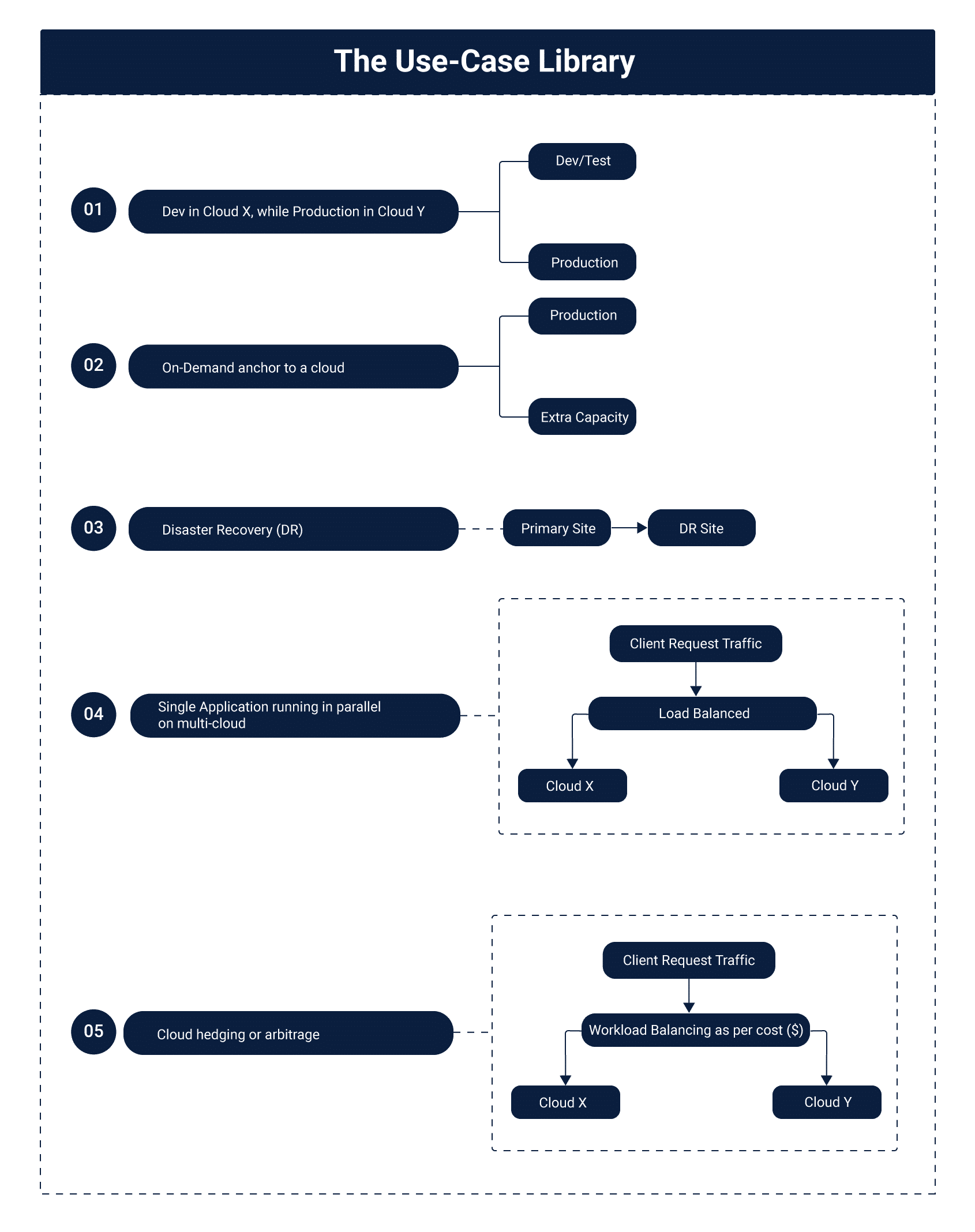

It’s quite evident that multi-cloud is a spectrum and that its implementation models depend upon the skills of the cloud architect. Still, a few models have evolved and are available for ready reference. These, however, have been challenged by the complexities that arise as enterprises transition to distributed containerized applications. Let’s first dive into the ready-made implementation models, and then understand the complexities that containers add.

- Dev in Cloud X, while Production in Cloud Y:

This is a very common use case, initially driven by a conservative, low-risk mindset: use the cloud to run development and testing, and host production on on-premise infrastructure. While this arrangement is common, it is sometimes flipped. It can make more sense to run testing on on-premise, more predictable, co-located VMs than to pay hourly for Amazon VMs. Production environments, however, demand multi-region abilities and advanced features, such as the CloudFront CDN, that are more likely to be available from a cloud vendor.

- On-Demand anchor to a cloud:

This is another popular implementation model among enterprises, and it exploits one key feature of the cloud, namely elasticity. Here production runs on on-premise infrastructure, and whenever peak times demand additional capacity, the excess workload spills over to the cloud. This is also known as cloud bursting. The strategy avoids investing in additional hardware to meet peak-time demands, which are usually short-lived and would otherwise leave that investment underutilized.
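The bursting decision can be illustrated with a minimal sketch. It assumes demand and capacity are measured in the same abstract units; the function name `split_load` and the sample figures are hypothetical and not taken from any vendor tooling.

```python
def split_load(demand: int, on_prem_capacity: int) -> tuple[int, int]:
    """Return (on_prem_units, cloud_units) for a given demand.

    Hypothetical helper: everything that fits stays on-premise,
    and only the overflow bursts to the cloud.
    """
    if demand < 0 or on_prem_capacity < 0:
        raise ValueError("demand and capacity must be non-negative")
    on_prem = min(demand, on_prem_capacity)
    cloud = demand - on_prem  # overflow served by the cloud
    return on_prem, cloud


# Illustrative hourly demand against an assumed on-premise capacity of 100 units.
hourly_demand = [40, 70, 130, 90]
plan = [split_load(d, on_prem_capacity=100) for d in hourly_demand]
print(plan)  # [(40, 0), (70, 0), (100, 30), (90, 0)]
```

Only the third hour exceeds the assumed capacity, so only that hour incurs cloud usage, which is the cost argument behind bursting.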

- Disaster Recovery (DR):

This is a natural advantage afforded by redundancy in multi-cloud architectures. It’s a clean implementation model where production runs either on one cloud or in an on-premise data centre. An exact copy is replicated on another cloud, which serves as the fallback option and the enabler for disaster recovery. A common pitfall to avoid is relying on a single cloud provider to host both production and its copy. Recovery mechanisms usually aim for a slim window in terms of mean-time-to-recover, along with the notion of wafer-thin data loss, and a cloud DR strategy is no exception. Recovery Point Objective (RPO) is a measure of the maximum data loss that an enterprise is willing to bear in the event of failure. This could typically stretch to hours, even days.
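As a rough illustration of how an RPO can be checked, the sketch below treats the data-loss window as the time between the last successful replication and the failure. The function names and timestamps are hypothetical, and real replication tooling reports this differently.

```python
from datetime import datetime, timedelta


def data_loss_window(last_replicated: datetime, failed_at: datetime) -> timedelta:
    """Time span of changes that never reached the fallback cloud."""
    return max(failed_at - last_replicated, timedelta(0))


def meets_rpo(last_replicated: datetime, failed_at: datetime, rpo: timedelta) -> bool:
    """True when the potential data loss stays within the agreed RPO."""
    return data_loss_window(last_replicated, failed_at) <= rpo


# Illustrative timestamps: the last sync happened 45 minutes before the outage.
last_sync = datetime(2024, 1, 1, 10, 0)
outage = datetime(2024, 1, 1, 10, 45)

print(meets_rpo(last_sync, outage, rpo=timedelta(hours=1)))     # True
print(meets_rpo(last_sync, outage, rpo=timedelta(minutes=15)))  # False
```

The same replication schedule can therefore satisfy a relaxed RPO and violate a strict one, which is why the RPO should drive how often data is copied to the second cloud.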

- Single Application running in parallel on multi-cloud:

This implementation model is a clear deviation from those mentioned above. While the models above run distinct applications in each cloud, each with a well-defined purpose, this model runs the same application in parallel on multiple cloud environments, thus bringing in the benefits of load balancing. Cloud literature contains many references to this model, with enterprises reporting that an active-active cloud implementation has given them greater strength in dealing with DNS DDoS attacks, and even with regional or global failures.

This model may seem quite similar to the DR model, where the DR site takes over when the primary site goes offline. Keep in mind, however, that a failover to a DR site can still incur a slim margin of data loss, as in traditional DR setups, even when the RPO is believed to be theoretically zero.
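The active-active idea can be sketched as round-robin routing over whichever clouds are currently healthy. This is a simplified illustration, not how any particular load balancer works; `route_requests` and the cloud names are hypothetical.

```python
from itertools import cycle


def route_requests(request_ids, cloud_health):
    """Assign each request to a healthy cloud in round-robin order.

    cloud_health maps a (hypothetical) cloud name to True/False.
    """
    healthy = [name for name, ok in cloud_health.items() if ok]
    if not healthy:
        raise RuntimeError("no healthy cloud available")
    targets = cycle(healthy)
    return {rid: next(targets) for rid in request_ids}


# Both clouds up: traffic alternates between them.
both_up = route_requests([1, 2, 3, 4], {"cloud_x": True, "cloud_y": True})

# One cloud down: the surviving cloud absorbs all traffic.
one_down = route_requests([1, 2, 3, 4], {"cloud_x": False, "cloud_y": True})

print(both_up)
print(one_down)
```

Because both clouds serve live traffic all the time, losing one changes only the distribution of requests, whereas the DR model must first bring the fallback copy into service.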

- Cloud hedging or arbitrage:

This is quite a holy grail in multi-cloud discussions, but at present it is the least commonly implemented model. The central idea seems to have been drawn from the previous model, where workloads are distributed across clouds based upon their current load. In this model, workloads are instead distributed across clouds based on the cost of running them, which is determined dynamically.

It is believed, though, that as tools like Kubernetes improve, enterprises may begin to take this model more seriously and choose arbitrage based upon each application's need for variable compute resources.
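A minimal sketch of cost-based placement follows. It assumes a single hourly price per cloud, which is a deliberate simplification of real pricing; the function name `cheapest_cloud`, the cloud names and the prices are all hypothetical.

```python
def cheapest_cloud(hours: float, hourly_price: dict[str, float]) -> tuple[str, float]:
    """Pick the cloud with the lowest current hourly price.

    Returns the chosen cloud and the estimated cost for the workload.
    """
    if not hourly_price:
        raise ValueError("at least one cloud price is required")
    name = min(hourly_price, key=hourly_price.get)
    return name, round(hours * hourly_price[name], 2)


# Illustrative snapshot of prices; in practice these would be refreshed dynamically.
prices_now = {"cloud_x": 0.12, "cloud_y": 0.10, "cloud_z": 0.15}
choice = cheapest_cloud(8, prices_now)
print(choice)  # ('cloud_y', 0.8)
```

Re-running the same selection whenever prices change is what makes the model dynamic, and it only pays off if the workload is portable enough to move between clouds cheaply.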

All said and done, before selecting any implementation model, enterprises need to invest in a container management platform, e.g. Kubernetes, and in a data management solution. While the former acts as a deployment enabler, the latter moves data smoothly between containers hosted on multi-cloud environments. In DR environments the justification for a data management solution is implicit. Further, enterprises will naturally be keen to move data between containers in a stateful manner, even if it means losing a small workload during transfer.

Challenges and workarounds

For all the advantages of multi-cloud architectures, and with tech-savvy enterprises adopting them to harvest value from their investments, they do not come without their share of challenges. With challenges, there are workarounds too! Let’s dive into a select few!

- Umbrella Management Consoles

When an application straddles cloud providers, each with its own management console, it creates undesirable management complexity. This is further accentuated by each provider having its own processes for dealing with management issues. A consolidated management console puts most of this complexity to rest through process uniformity. Finding one is a task in itself, and even then it may not eliminate the need for a cloud-vendor specific monitoring console to apply patches or make advanced configuration changes.

- Cloud technology skill gap

The increased adoption of cloud technologies is creating a huge demand for professionals with the right skill set for roles as developers, testers, architects and administrators. One report states that AWS certified professionals can command a 28% higher salary than their peers. A large-scale L&D exercise to reskill and ramp up the existing workforce on the latest cloud technologies, or outsourcing select functions to a managed service provider with multi-cloud expertise, is a clear way to deal with this challenge.

- Inconsistent application hosting

This is probably most true of large organizations where many consumer groups host applications simultaneously on multiple clouds. If one consumer group has a penchant for a particular cloud provider for certain workloads, and this choice is not consistent across the organization, aggregate costs can spiral and erode cost efficiency. One undesirable example is hosting the same application on multiple clouds. Read this advisory from TechRepublic for a solution.

- Migration

This is an area driven by experience and expert judgement. Moving from, say, a hybrid to a multi-cloud environment demands a careful comparison to determine the value proposition. There is also a need for automated deployment engines that carry out the migration transparently and as one atomic action, rather than one deployment at a time.

- Compliance

This is a topic taken seriously by all enterprises, and it reaches into almost all business functions. A few standards feature prominently in most literature, such as HIPAA, FISMA, PCI DSS and SOX. Cloud vendors, too, are willing to comply with these. Hence, enterprises have to ensure that cloud vendors are standards compliant before entrusting them with their multi-cloud architectures.

- Security

Any discussion on multi-cloud is incomplete without addressing the issue of cloud security. It’s not only psychological but logical too that apprehension regarding security comes to the fore, especially when workloads are entrusted to external cloud service providers. A detailed discussion on security-related SLAs is imperative, with both remediation and penalties built in for whenever data breaches or security-related violations occur. In addition, enterprise IT must negotiate with consumer groups within the organization so that they follow due process when acquiring cloud resources. This will avoid disasters caused by oversights in data security evaluation.

- Billing Complexities

Billing is one area that becomes tedious in multi-cloud environments. The reason is the number of variables that influence billing for each cloud vendor. These could be pricing models, size of VMs, data ingress/egress fees and so on. This multi-vendor billing sprawl can drive finance teams crazy when auditing costs. A managed multi-cloud provider is a good choice to streamline billing and make cost analysis easy and straightforward.
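To show what streamlined billing might look like at its simplest, the sketch below rolls invoice line items from several vendors into per-vendor totals. It assumes the line items have already been normalized into a common (vendor, category, amount) shape and a single currency; the names and amounts are hypothetical.

```python
from collections import defaultdict


def total_by_vendor(line_items):
    """Sum amounts per vendor from (vendor, category, amount) tuples."""
    totals = defaultdict(float)
    for vendor, _category, amount in line_items:
        totals[vendor] += amount
    return {vendor: round(total, 2) for vendor, total in totals.items()}


# Illustrative, already-normalized line items from two hypothetical vendors.
invoice_lines = [
    ("cloud_x", "compute", 120.50),
    ("cloud_x", "egress", 30.25),
    ("cloud_y", "compute", 99.00),
    ("cloud_y", "storage", 12.75),
]

totals = total_by_vendor(invoice_lines)
grand_total = round(sum(totals.values()), 2)
print(totals)       # {'cloud_x': 150.75, 'cloud_y': 111.75}
print(grand_total)  # 262.5
```

The hard part in practice is the normalization step that this sketch takes for granted, since each vendor reports pricing models, VM sizes and data transfer in its own format.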

It seems evident that the discussion on whether to adopt the cloud at all is now behind us, given its imperatives. The narrative seems to have shifted to the cloud being used as an enabler for larger business objectives such as continuous availability, cost efficiency and enhanced functionality, thanks to the freedom to choose from a spectrum of cloud providers. A multi-cloud architecture seems to be an obvious fit here, even though it comes with its share of challenges. The good news is that each challenge has some workaround available. Hence, a sound multi-cloud strategy is essential to reap benefits from cloud investments.
